Memory Hierarchy in Semiconductors: From Cache to Storage Explained

Discover how modern processors balance speed, capacity, and cost through sophisticated memory hierarchies. From L1 cache running at CPU speeds to massive storage arrays, explore the engineering trade-offs that power computing performance.

Every time you open an app, stream a video, or run AI inference, you're relying on one of computing's most elegant solutions: the memory hierarchy. This sophisticated system balances the impossible triangle of speed, capacity, and cost by organizing memory into multiple tiers, each optimized for specific use cases.

From CPU registers with sub-nanosecond access times to massive storage arrays measured in petabytes, understanding the memory hierarchy is crucial for anyone working in semiconductors, system design, or performance optimization.

The Memory Hierarchy Pyramid

Level 1: CPU Registers

Fastest

Capacity

32-64 registers

Access Time

0.1-0.5 ns

Location

CPU core

Cost/Bit

Highest

Level 2: L1 Cache

Very Fast

Capacity

32-64 KB

Access Time

1-2 ns

Location

CPU core

Cost/Bit

Very High

Level 3: L2 Cache

Fast

Capacity

256 KB-1 MB

Access Time

3-7 ns

Location

Per core or shared between cores

Cost/Bit

High

Level 4: L3 Cache

Moderate

Capacity

8-64 MB

Access Time

10-20 ns

Location

Shared across cores

Cost/Bit

Medium-High

Level 5: Main Memory (DRAM)

Medium

Capacity

4-128 GB

Access Time

50-100 ns

Location

System memory

Cost/Bit

Medium

Level 6: Storage (SSD/HDD)

Slowest

Capacity

256 GB-100 TB

Access Time

0.1-10 ms

Location

External storage

Cost/Bit

Lowest
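
The tier latencies above can be combined into a single average memory access time (AMAT). The following is a minimal sketch, not a model of any specific processor: it uses the midpoints of the latency ranges listed in the pyramid, while the hit rates and the `amat` helper are illustrative assumptions.

```python
# Average memory access time (AMAT) for a multi-level hierarchy.
# Latencies are midpoints of the ranges listed above; the hit rates are
# illustrative assumptions, not measured values.

def amat(levels, backing_latency_ns):
    """levels: list of (hit_latency_ns, hit_rate), ordered from L1 outward.

    Every access that reaches a level pays that level's latency; accesses
    that miss fall through to the next level and finally to backing memory.
    """
    total = 0.0
    reach_probability = 1.0  # fraction of accesses that get this far
    for hit_latency_ns, hit_rate in levels:
        total += reach_probability * hit_latency_ns
        reach_probability *= (1.0 - hit_rate)
    return total + reach_probability * backing_latency_ns

levels = [
    (1.5, 0.95),   # L1: 1-2 ns, assumed 95% hit rate
    (5.0, 0.80),   # L2: 3-7 ns, assumed 80% of L1 misses hit here
    (15.0, 0.75),  # L3: 10-20 ns, assumed 75% of L2 misses hit here
]
dram_ns = 75.0     # DRAM: 50-100 ns

average_ns = amat(levels, dram_ns)
print(f"AMAT = {average_ns:.4f} ns (vs {dram_ns} ns if every access went to DRAM)")
```

Under these assumed hit rates the average access costs roughly 2 ns, close to L1 latency, even though DRAM is about 50 times slower than L1. That gap is why small changes in hit rate have a large effect on overall performance.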

Cache Architecture Deep Dive

Cache Design Principles

Temporal Locality

Recently accessed data is likely to be accessed again soon

Spatial Locality

Data near recently accessed locations is likely to be needed

Cache Lines

Data moved in fixed-size blocks (typically 64-128 bytes)
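
To see why spatial locality and cache lines matter together, the sketch below counts misses for two traversal orders of the same matrix. It is a simplified model under stated assumptions: a tiny fully associative LRU cache of 16 lines, 64-byte lines, 8-byte elements, and a row-major layout. None of these sizes describe a particular CPU.

```python
from collections import OrderedDict

LINE_BYTES = 64      # cache line size, from the range quoted above
ELEM_BYTES = 8       # assumed element size (e.g. a 64-bit value)
CACHE_LINES = 16     # assumed tiny cache so the effect shows on a small matrix

def count_misses(addresses):
    """Count misses in a small fully associative LRU cache of whole lines."""
    cache = OrderedDict()
    misses = 0
    for addr in addresses:
        line = addr // LINE_BYTES
        if line in cache:
            cache.move_to_end(line)          # refresh LRU position on a hit
        else:
            misses += 1
            cache[line] = True
            if len(cache) > CACHE_LINES:
                cache.popitem(last=False)    # evict the least recently used line
    return misses

ROWS, COLS = 64, 64  # matrix stored row-major: element (r, c) at index r*COLS + c

def address(r, c):
    return (r * COLS + c) * ELEM_BYTES

row_major = [address(r, c) for r in range(ROWS) for c in range(COLS)]
col_major = [address(r, c) for c in range(COLS) for r in range(ROWS)]

row_misses = count_misses(row_major)
col_misses = count_misses(col_major)
print("row-major misses:   ", row_misses)   # 512: one miss per 64-byte line
print("column-major misses:", col_misses)   # 4096: every access misses
```

Both loops touch the same 4096 elements. The row-major order uses all eight elements of each fetched line before moving on, while the column-major order touches 64 different lines per column and evicts each one before it is reused.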

Cache Mapping Strategies

Direct Mapped

Each memory location maps to exactly one cache line

Set Associative

Each memory location maps to exactly one set, but can occupy any of the cache lines (ways) within that set

Fully Associative

Any memory location can map to any cache line
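
The three mapping strategies are points on one spectrum, which a single parameterized model can illustrate. The sketch below is an assumption-laden toy (the `Cache` class, the 8-line capacity, and LRU replacement are all chosen for illustration): `ways=1` behaves as direct mapped, and `ways=num_lines` behaves as fully associative.

```python
from collections import OrderedDict

class Cache:
    """Set-associative cache model with LRU replacement.

    ways=1 gives a direct-mapped cache; ways=num_lines gives a fully
    associative one. Sizes used below are assumptions for illustration.
    """
    def __init__(self, num_lines, ways, line_bytes=64):
        assert num_lines % ways == 0
        self.line_bytes = line_bytes
        self.ways = ways
        self.num_sets = num_lines // ways
        self.sets = [OrderedDict() for _ in range(self.num_sets)]

    def access(self, addr):
        """Return True on a hit, False on a miss (the line is then filled)."""
        block = addr // self.line_bytes      # strip the byte offset
        index = block % self.num_sets        # which set the block maps to
        tag = block // self.num_sets         # identifies the block within its set
        lines = self.sets[index]
        if tag in lines:
            lines.move_to_end(tag)           # refresh LRU position
            return True
        if len(lines) >= self.ways:
            lines.popitem(last=False)        # evict the LRU line in this set
        lines[tag] = True
        return False

def misses(cache, addresses):
    return sum(not cache.access(a) for a in addresses)

# Two addresses exactly one cache-size apart collide in a direct-mapped cache.
NUM_LINES, LINE = 8, 64
a, b = 0, NUM_LINES * LINE
pattern = [a, b] * 4

direct = misses(Cache(NUM_LINES, ways=1), pattern)
two_way = misses(Cache(NUM_LINES, ways=2), pattern)
fully = misses(Cache(NUM_LINES, ways=NUM_LINES), pattern)
print(direct, two_way, fully)   # 8 2 2
```

In the direct-mapped configuration the two addresses keep evicting each other (conflict misses), so all eight accesses miss. With two or more ways both lines fit in the same set and only the two initial cold misses remain.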

Modern Cache Innovations

Victim Cache

Small buffer storing recently evicted cache lines to reduce conflict misses

Prefetching

Predictively loading data before it's requested based on access patterns

Smart Cache

AI-driven cache management adapting to workload characteristics
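
As a rough illustration of prefetching, the sketch below models the simplest possible scheme, a next-line prefetcher, on a streaming access pattern. This is an assumed toy model (the `demand_misses` function, the 32-line LRU cache, and the trace are hypothetical); hardware prefetchers track strides and multiple streams and are considerably more elaborate.

```python
from collections import OrderedDict

def demand_misses(line_trace, capacity=32, prefetch_next=False):
    """Count demand misses in an LRU cache holding `capacity` lines.

    With prefetch_next=True, every demand miss on line X also brings in
    line X+1 (a simple next-line prefetcher).
    """
    cache = OrderedDict()

    def fill(line):
        cache[line] = True
        cache.move_to_end(line)
        if len(cache) > capacity:
            cache.popitem(last=False)    # evict the least recently used line

    misses = 0
    for line in line_trace:
        if line in cache:
            cache.move_to_end(line)      # hit: refresh LRU position
        else:
            misses += 1
            fill(line)
            if prefetch_next:
                fill(line + 1)           # speculative fetch of the next line
    return misses

sequential = list(range(1000))           # streaming access, one line at a time
without_prefetch = demand_misses(sequential)
with_prefetch = demand_misses(sequential, prefetch_next=True)
print(without_prefetch, with_prefetch)   # 1000 500
```

On this purely sequential trace the prefetcher halves the demand misses, because every second line is already present when it is requested. On a random trace the same prefetcher would mostly waste bandwidth, which is why real designs try to detect the access pattern first.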

Memory Technologies Comparison

| Technology | Type | Access Time | Density | Power | Cost |
|---|---|---|---|---|---|
| SRAM | Static RAM | 1-10 ns | Low | High | Very High |
| DRAM | Dynamic RAM | 50-100 ns | High | Medium | Medium |
| 3D XPoint | Non-volatile | 100-300 ns | Very High | Low | High |
| NAND Flash | Non-volatile | 25-100 μs | Very High | Very Low | Low |
| HDD | Magnetic | 5-15 ms | Highest | Medium | Very Low |

Performance Impact of Memory Hierarchy

[Figures: "Cache Hit Rates" and "Access Time Comparison" charts; "Real-World Performance Impact" panel covering performance gain from effective caching, typical L1 cache hit rate, and the cache-versus-storage speed difference.]

Emerging Memory Technologies

Processing-in-Memory (PIM)

Integrates computation capabilities directly into memory chips, reducing data movement and improving performance for AI workloads.

High Bandwidth Memory (HBM)

Stacked DRAM dies connected through a very wide interface, delivering extremely high bandwidth (up to 1 TB/s) for GPU and HPC applications.
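
The bandwidth advantage comes mostly from interface width, which simple arithmetic can show. The sketch below computes theoretical peak bandwidth as width times per-pin data rate. The specific figures are assumptions chosen as an example: a 1024-bit interface per stack at 6.4 Gb/s per pin (HBM3-class) against a 64-bit DDR5-6400 module. Sustained bandwidth in real systems is lower than these peaks.

```python
def peak_bandwidth_gb_s(bus_width_bits, gbit_per_s_per_pin, stacks=1):
    """Theoretical peak bandwidth in GB/s: width x per-pin rate / 8 bits per byte."""
    return bus_width_bits * gbit_per_s_per_pin / 8 * stacks

# Assumed example figures, not a specification of any particular product.
hbm_stack = peak_bandwidth_gb_s(1024, 6.4)     # one HBM3-class stack
ddr5_module = peak_bandwidth_gb_s(64, 6.4)     # one 64-bit DDR5-6400 module

print(f"HBM stack:   {hbm_stack:.1f} GB/s")
print(f"DDR5 module: {ddr5_module:.1f} GB/s")
print(f"Ratio:       {hbm_stack / ddr5_module:.0f}x")
```

At the same per-pin rate, the 16 times wider interface gives 16 times the peak bandwidth, and placing several stacks next to a GPU multiplies that again.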

Persistent Memory

Non-volatile memory with near-DRAM performance, bridging the gap between memory and storage.

Neuromorphic Memory

Memory technologies that mimic brain synapses, enabling ultra-low power AI processing.

Memory System Validation Challenges

Validating complex memory hierarchies presents unique challenges, from cache coherency verification to performance characterization across different workloads.

Key Validation Areas:

- Cache coherency protocols

- Memory controller functionality

- Bandwidth and latency characterization

- Power consumption optimization

- Error correction and reliability

- Multi-core memory contention
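
To make the first validation area concrete, the sketch below runs randomized traffic against a heavily simplified MSI coherence model and checks two invariants after every step: at most one Modified copy exists, and no read ever returns stale data. Everything here is an illustrative assumption (the `MsiLine` class, a single cache line, atomic transitions); production protocols such as MESI or MOESI have more states and must also handle races between in-flight requests.

```python
import random

class MsiLine:
    """One cache line tracked across `n` caches under a simplified MSI protocol."""
    def __init__(self, n):
        self.state = ["I"] * n      # per-cache state: Modified, Shared, Invalid
        self.copy = [None] * n      # per-cache copy of the data
        self.memory = 0             # value held in main memory

    def read(self, i):
        if self.state[i] == "I":
            for j, s in enumerate(self.state):
                if s == "M":                    # owner writes back and downgrades
                    self.memory = self.copy[j]
                    self.state[j] = "S"
            self.copy[i] = self.memory
            self.state[i] = "S"
        return self.copy[i]

    def write(self, i, value):
        for j in range(len(self.state)):
            if j != i:
                self.state[j] = "I"             # invalidate all other copies
        self.copy[i] = value
        self.state[i] = "M"

    def check_invariants(self):
        modified = self.state.count("M")
        shared = self.state.count("S")
        assert modified <= 1, "more than one Modified copy"
        assert not (modified and shared), "Modified coexists with Shared"

def random_test(num_caches=4, steps=10_000, seed=0):
    """Drive random reads and writes; raise AssertionError on any violation."""
    rng = random.Random(seed)
    line = MsiLine(num_caches)
    last_written = 0
    for _ in range(steps):
        i = rng.randrange(num_caches)
        if rng.random() < 0.5:
            last_written += 1
            line.write(i, last_written)
        else:
            assert line.read(i) == last_written, "stale read"
        line.check_invariants()
    return steps

print(random_test(), "steps passed")
```

The same pattern, a reference model plus invariant checks under random stimulus, scales up to the constrained-random testbenches used for real coherence verification, where the model is compared against RTL or silicon rather than against itself.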

Testing Approaches:

- Synthetic memory benchmarks

- Real-world workload simulation

- Stress testing and corner cases

- Power and thermal validation

- Cross-platform compatibility

- Long-term reliability testing
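
A classic synthetic memory benchmark is the pointer chase: each load depends on the previous one, so latency cannot be hidden by overlapping accesses, and a random order defeats simple prefetchers. The sketch below shows the structure only. It is an assumption-heavy illustration: in Python, interpreter overhead dominates the timings and list elements are boxed objects, so a real latency benchmark would be written in C or a similar language with a flat array.

```python
import random
import time

def build_chain(n, seed=0):
    """Return a next-index list forming one random cycle over n slots.

    A single cycle guarantees the chase visits every slot, and the random
    order defeats simple stride prefetching.
    """
    rng = random.Random(seed)
    order = list(range(n))
    rng.shuffle(order)
    nxt = [0] * n
    for k in range(n):
        nxt[order[k]] = order[(k + 1) % n]
    return nxt

def chase(nxt, steps):
    """Follow the chain for `steps` dependent loads; return the final index."""
    i = 0
    for _ in range(steps):
        i = nxt[i]
    return i

def ns_per_step(n, steps=200_000):
    """Time a chase over a chain of n slots; result in nanoseconds per access."""
    nxt = build_chain(n)
    start = time.perf_counter()
    chase(nxt, steps)
    return (time.perf_counter() - start) / steps * 1e9

for n in (1_000, 50_000, 500_000):
    print(f"{n:>8} slots: {ns_per_step(n):.1f} ns per dependent access")
```

Sweeping the working-set size from well below L1 capacity to well beyond L3 is what exposes each tier: in a compiled implementation, the time per access steps up each time the chain outgrows a cache level.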

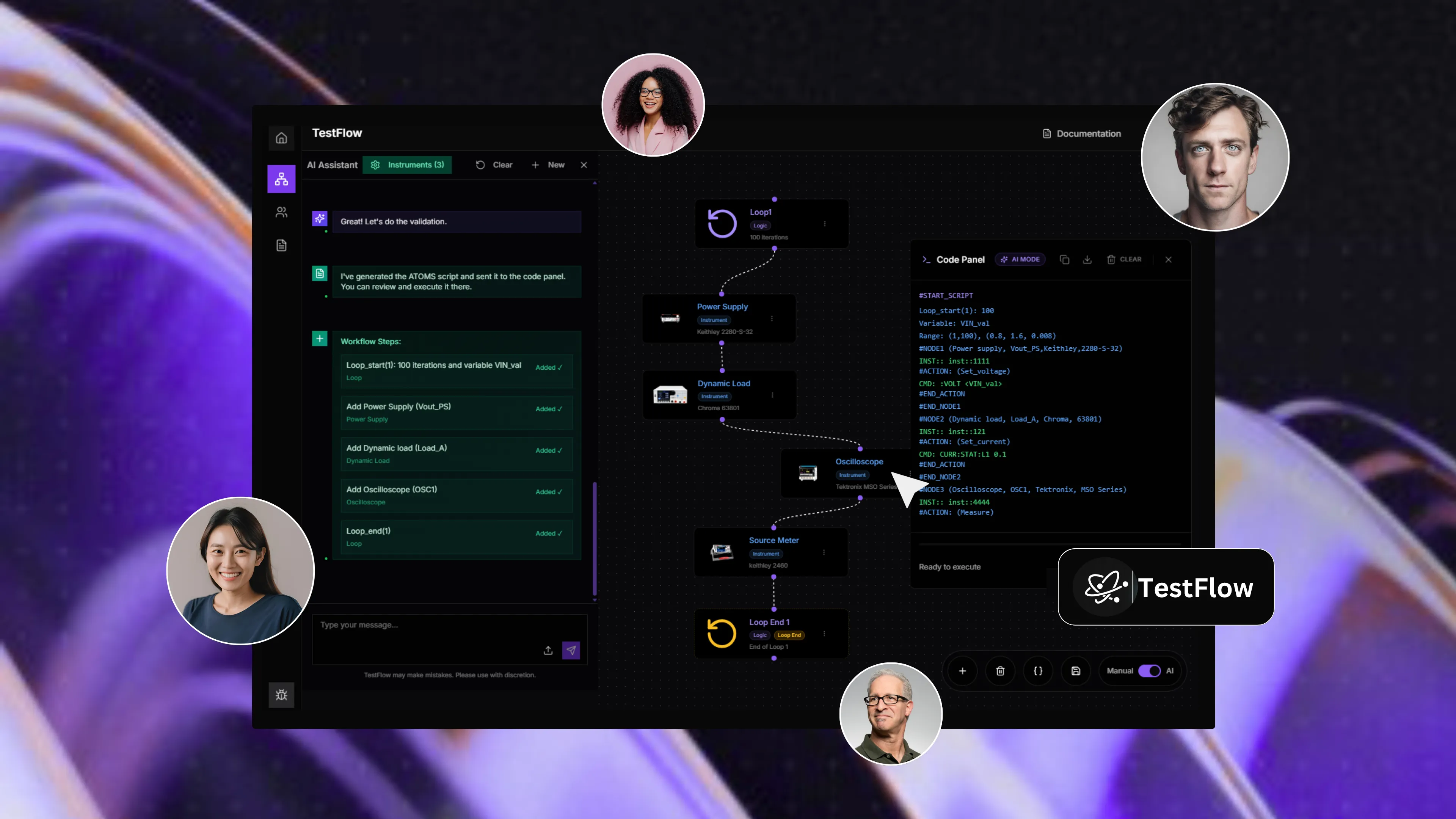

TestFlow for Memory System Validation

Advanced validation platforms like TestFlow provide comprehensive memory system testing, from cache behavior analysis to full-system performance characterization, helping ensure optimal memory hierarchy performance across all operating conditions.

The Future of Memory Hierarchy

Memory hierarchy design continues to evolve rapidly, driven by the demands of AI workloads, edge computing, and the need for ever-greater performance per watt. As we approach the physical limits of traditional scaling, innovative approaches like processing-in-memory and neuromorphic architectures are opening new possibilities.

The key to future memory systems will be intelligence: adaptive hierarchies that learn from usage patterns, predictive prefetching algorithms, and memory controllers that optimize for specific workloads in real time. Understanding these principles is crucial for anyone designing or validating modern computing systems.

Whether you're optimizing cache performance, designing memory controllers, or validating complex memory hierarchies, the fundamental trade-offs between speed, capacity, and cost will continue to drive innovation in this critical area of semiconductor design.

Optimize Your Memory System Performance

Complex memory hierarchies require sophisticated validation to ensure optimal performance. TestFlow's AI-powered platform provides comprehensive memory system testing and characterization capabilities.